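Self-modified bootloader for the 蜗牛星际 A (single-NIC) box: drive slots in order, SSD hidden, hibernation supported

Based on Jun's loader 1.04b.

The stock loader shows 16 drive slots, which bothered the perfectionist in me, so I downloaded the stock loader, changed the slot count under Ubuntu, and hid the SSD.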

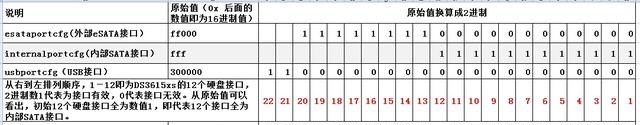

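How it works:

The slot count is set to 4 and the slots are counted in reverse, so the SSD ends up in slot 5. Because the system only has 4 slots, the SSD is hidden. If the slot order comes out upside down, swap the connectors inside the case.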

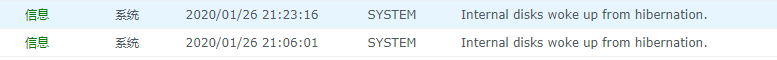

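Hibernation works normally.

How it works:

I set internalportcfg to 0xf, which is 1111 in binary. Each 1 bit marks a port as valid, so this means there are 4 internal drive ports.

maxdisks is set to 4.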
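As a quick check on the bitmask arithmetic, the sketch below derives an internalportcfg value from a bay count by setting the lowest N bits. The function name bay_mask is made up for this illustration and is not part of the loader.

```shell
#!/bin/sh
# bay_mask is a hypothetical helper, not part of Jun's loader.
# It prints a hex mask with the lowest N bits set, one bit per internal port.
bay_mask() {
    printf '0x%x\n' $(( (1 << $1) - 1 ))
}

bay_mask 4    # prints 0xf
bay_mask 16   # prints 0xffff
```

The same helper gives 0xffffff for 24 bays, which matches the value used in the reference tutorial quoted below.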

Schematic:

Result:

Reference material follows. Original author: autohintbot; source: XPEnology Community.

This seems like hard-to-find information, so I thought I'd write up a quick tutorial. I'm running XPEnology as a VM under ESXi, now with a 24-bay Supermicro chassis. The Supermicro world has a lot of similar-but-different options. In particular, I'm running an E5-1225v3 Haswell CPU, with 32GB memory, on a X10SLM+-F motherboard in a 4U chassis using a BPN-SAS2-846EL1 backplane. This means all 24 drives are connected to a single LSI 9211-8i based HBA, flashed to IT mode. That should be enough Google-juice to find everything you need for a similar setup!

The various Jun loaders default to 12 drive bays (3615/3617 models), or 16 drive bays (DS918+). This presents a problem when you update, if you increase maxdisks after install--you either have to design your volumes around those numbers, so whole volumes drop off after an update before you re-apply the settings, or just deal with volumes being sliced and checking integrity afterwards.

Since my new hardware supports the 4.x kernel, I wanted to use the DS918+ loader, but update the patching so that 24 drive bays was the new default. Here's how. Or, just grab the files attached to the post.

Locating extra.lzma/extra2.lzma

This tutorial assumes you've messed with the synoboot.img file before. If not, a brief guide on mounting:

Install OSFMount

"Mount new" button, select synoboot.img

On first dialog, "Use entire image file"

On main settings dialog, "mount all partitions" radio button under volumes options, uncheck "read-only drive" under mount options

Click OK

You should now have three new drives mounted. Exactly where will depend on your system, but if you had a C/D drive before, probably E/F/G.

The first readable drive has an EFI/grub.cfg file. This is what you usually customize, e.g. to set the serial number.

The second drive should have an extra.lzma and an extra2.lzma file, alongside some other things. Copy these somewhere else.

Unpacking, Modifying, Repacking

To be honest, I don't know why the patch exists in both of these files. Maybe one is applied during updates, one at normal boot time? I never looked into it.

But the patch that's being applied to the max disks count exists in these files. We'll need to unpack them first. Some of these tools exist on macOS, and likely Windows ports, but I just did this on a Linux system. Spin up a VM if you need. On a fresh system you likely won't have lzma or cpio installed, but apt-get should suggest the right packages.

Copy extra.lzma to a new, temporary folder. Run:

lzma -d extra.lzma

cpio -idv < extra

In the new ./etc/ directory, you should see:

jun.patch

rc.modules

synoinfo_override.conf

Open up jun.patch in the text editor of your choice.

Search for maxdisks. There should be two instances--one in the patch delta up top, and one in a larger script below. Change the 16 to a 24.

Search for internalportcfg. Again, two instances. Change the 0xffff to 0xffffff for 24. This is a bitmask--more info elsewhere on the forums.

Open up synoinfo_override.conf.

Change the 16 to a 24, and 0xffff to 0xffffff
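If you would rather script the synoinfo_override.conf change than edit it by hand, something like the following might do. It is only a sketch: set_bays is a hypothetical helper, and it assumes the file uses key="value" lines with maxdisks and internalportcfg at the start of a line. It does not touch jun.patch, which is a patch file and still needs the manual edit described above.

```shell
#!/bin/sh
# set_bays is a hypothetical helper, not part of the loader.
# Assumption: the config holds lines like maxdisks="16" and
# internalportcfg="0xffff". A backup is kept next to it as FILE.bak.
set_bays() {
    conf=$1
    bays=$2
    # One bit per bay: lowest N bits set.
    mask=$(printf '0x%x' $(( (1 << bays) - 1 )))
    sed -i.bak \
        -e "s/^maxdisks=.*/maxdisks=\"$bays\"/" \
        -e "s/^internalportcfg=.*/internalportcfg=\"$mask\"/" \
        "$conf"
}

# Usage (run from the root of the extracted files):
#   set_bays ./etc/synoinfo_override.conf 24
```

Check the result against the .bak copy before repacking, since a key written in a different form would be left unchanged.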

To repack, in a shell at the root of the extracted files, run:

(find . -name modprobe && find . \! -name modprobe) | cpio --owner root:root -oH newc | lzma -8 > ../extra.lzma

Note that the resulting file sits one directory up (../extra.lzma).

Repeat the same steps for extra2.lzma.
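Since the same steps run twice, they can be wrapped in two small functions. This is a sketch built from the commands above, not a tested tool: unpack_extra and repack_extra are hypothetical names, and the archive is decompressed to a pipe so that no intermediate cpio file ends up inside the tree that gets repacked.

```shell
#!/bin/sh
# Hypothetical helpers wrapping the lzma/cpio commands from the post.

# unpack_extra ARCHIVE WORKDIR: extract ARCHIVE into WORKDIR.
unpack_extra() {
    mkdir -p "$2" && lzma -dc "$1" | (cd "$2" && cpio -idv)
}

# repack_extra WORKDIR OUTPUT: rebuild OUTPUT from WORKDIR.
# OUTPUT should live outside WORKDIR so it is not picked up by find.
repack_extra() {
    (cd "$1" &&
        (find . -name modprobe && find . \! -name modprobe) |
        cpio --owner root:root -oH newc | lzma -8) > "$2"
}

# Usage:
#   unpack_extra extra.lzma work-extra
#   ... edit work-extra/etc/jun.patch and synoinfo_override.conf ...
#   repack_extra work-extra extra.new.lzma
```

Keeping the output under a new name leaves the original archives untouched in case the modified loader fails to boot.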

Preparing synoboot.img

Just copy the updated extra/extra2.lzma files back where they came from, mounted under OSFMount.

While you're in there, you might need to update grub.cfg, especially if this is a new install. For the hardware mentioned at the very top of the post, with a single SAS expander providing 24 drives, where synoboot.img is a SATA disk for a VM under ESXi 6.7, I use these sata_args:

for 24-bay sas enclosure on 9211 LSI card (i.e. 24-bay supermicro)

set sata_args='DiskIdxMap=181C SataPortMap=1 SasIdxMap=0xfffffff4'

Close any explorer windows or text editors, and click dismount all in OSFMount. This image is ready to use.

If you're using ESXi and having trouble getting the image to boot, you can attach a network serial port and telnet in to see what's happening at boot time. You'll probably need to disable the ESXi firewall temporarily, or open port 23. It's super useful. Be aware that the 4.x kernel no longer supports extra hardware, so the network card will have to be officially supported. (I gave the VM a real network card via hardware passthrough.)

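Loader download: https://pan.baidu.com/s/1tvgZrRFSBQhVFXyM_sRh5g

Extraction code: ghw1

P.S. If DSM is already installed, just boot into PE and copy the two lzma files and grub.cfg to the corresponding locations on the boot USB stick or SSD. There is no need to reinstall the system. Then edit /etc.defaults/synoinfo.conf, setting internalportcfg to 0xf and maxdisks to 4. Your data is priceless, so proceed with care!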

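Thanks, I learned something.

In PE mode, how do I find the two lzma files and grub.cfg and put them in the corresponding locations on the boot USB stick or SSD?

Here is what I entered: login as: admin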

[email protected]'s password:

Could not chdir to home directory /var/services/homes/admin: No such file or directory

admin@DS918Plus:/$ ^M^M

-sh: $'\r^M': command not found

admin@DS918Plus:/$ vi /etc.defaults/synoinfo.conf

# system options

timezone="Pacific"

language="def"

maillang="enu"

codepage="enu"

defquota="5"

defshare="public"

defgroup="users"

defright="writeable"

configured="no"

pswdprotect="no"

autoblock_expriedday="0"

autoblock_attempts="0"

autoblock_attempt_min="0"

supportphoto="yes"

support_download="yes"

supportquota="yes"

supportitunes="yes"

supportddns="yes"

supportfilestation="yes"

The file is very long, so here is an excerpt:

support_ha="yes"

iscsi_target_type="lio4x"

wol_enabled_options="g"

HddEnableDynamicPower="yes"

support_ssd_cache="yes"

support_performance_event="yes"

internalportcfg="0xffff"

max_ha_spacecount="64"

cache_support_skip_seq_io="yes"

max_btrfs_snapshots="65536"

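Set internalportcfg to 0xf and maxdisks to 4. vi may not be showing the whole file, so download it and edit it locally. Then download the files provided above, extract the lzma files from the image, and in PE copy them to the partition on the boot USB stick.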
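Because the file is long, it may be easier to print just the two relevant lines with their line numbers than to scroll through it in vi. A minimal sketch, assuming both keys start a line in key="value" form; show_bay_settings is a made-up name:

```shell
#!/bin/sh
# show_bay_settings is a hypothetical helper for locating the two keys.
# It prints each matching line prefixed with its line number.
show_bay_settings() {
    grep -nE '^(maxdisks|internalportcfg)=' "$1"
}

# Usage on the NAS:
#   show_bay_settings /etc.defaults/synoinfo.conf
```

The line numbers can then be used to jump straight to each setting in the editor.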